Fabian Sommer/picture alliance via Getty Images

- A new Twitter "Safety Mode" could automatically block harmful trolls looking to engage with users.

- The feature was codeveloped with digital safety and mental-health experts.

- The push for increased safety features on social media comes as companies face criticism for not doing enough.

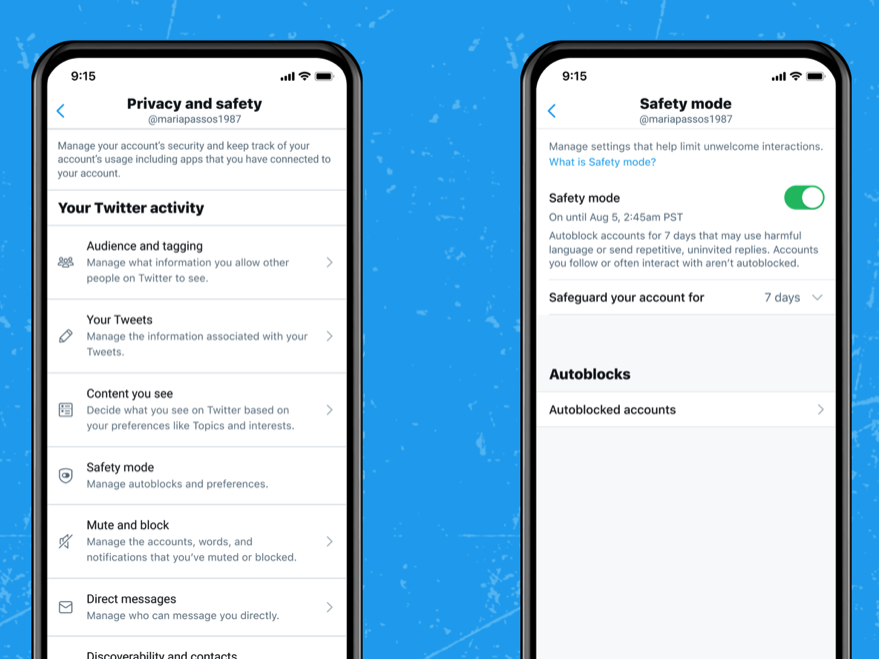

Twitter is taking another step toward moderating trolls, bots, and disruptive interactions on its platform with its upcoming "Safety Mode" feature.

By turning on "Safety Mode" in settings, a user can have Twitter scan for and automatically block interactions from "harmful" accounts for seven days, the company said. Accounts that are blocked with this feature will be unable to follow a user, see a user's tweets, or send direct messages, albeit only temporarily.

Twitter's algorithms can assess the likelihood that a Twitter account would negatively engage with a user – from sending repetitive, unwanted tweets or replies, to outright insults or hateful speech. The algorithm takes into account the relationship and interactivity between users, so that it doesn't accidentally block familiar, friendly accounts that the system may tag as potentially harmful.

"Our goal is to better protect the individual on the receiving end of Tweets by reducing the prevalence and visibility of harmful remarks," said Twitter Product Lead Jarrod Doherty.

During development, the team behind "Safety Mode" worked with experts in online safety, mental health, and human rights. The Twitter Trust and Safety Council, composed of independent advisory groups from around the world, was also involved to "ensure [the feature] entails mitigations that protect counter-speech while also addressing online harassment towards women and journalists," said Article 19, a digital and human rights organization on the council.

The social-media giant has taken steps to limit the amount of harmful information and rhetoric circulating on its platform. Twitter launched wider efforts in 2017 to build algorithms that could identify and disrupt abusive content. In 2018, the company released data on over 10 million tweets linked to foreign misinformation campaigns.

More recently, since introducing its list of guidelines in 2020, Twitter has challenged more than 11.7 million accounts, suspended more than 1,496 accounts, and removed more than 43,010 pieces of content globally for COVID misinformation, a spokesperson told Insider.

Twitter and other social-media companies have upped their investments in technology that can subdue internet trolls after facing continuing criticism for not doing enough to crack down on terms-of-service violators. Third-party researchers and services have also been developing real-time tools to track troll activity on platforms like Twitter, Facebook, and Reddit, specifically with regard to disinformation campaigns.

"Safety Mode" is being tested with small feedback groups and is not widely available, but the company plans to bring it to everyone once adjustments and improvements have been made.

This article originally appeared on z24.nl